After reading "Which is fastest: read, fread, ifstream or mmap?", I tried benchmarking C++ ifstream and mmap myself.

The test file is number.txt, and its size is 4 GiB:

    # ls -alt number.txt
    -rw-r--r-- 1 root root 4294967296 Apr 2 13:51 number.txt

test_ifstream.cpp looks like this:

    #include <chrono>
    #include <cstdlib>
    #include <fstream>
    #include <iostream>
    #include <string>
    #include <vector>

    const std::string FILE_NAME = "number.txt";
    const std::string RESULT_FILE_NAME = "result.txt";

    // 1 MiB read buffer.
    char chunk[1048576];

    int main(void)
    {
        std::ifstream ifs(FILE_NAME, std::ios_base::binary);
        if (!ifs) {
            std::cerr << "Error opening " << FILE_NAME << std::endl;
            exit(1);
        }

        std::ofstream ofs(RESULT_FILE_NAME, std::ios_base::binary);
        if (!ofs) {
            std::cerr << "Error opening " << RESULT_FILE_NAME << std::endl;
            exit(1);
        }

        // Time five full passes over the file.
        std::vector<std::chrono::milliseconds> duration_vec(5);
        for (std::vector<std::chrono::milliseconds>::size_type i = 0; i < duration_vec.size(); i++) {
            unsigned long long res = 0;
            ifs.seekg(0);
            auto begin = std::chrono::system_clock::now();

            // 4096 reads of 1 MiB each cover the 4 GiB file.
            for (size_t j = 0; j < 4096; j++) {
                ifs.read(chunk, sizeof(chunk));
                for (size_t k = 0; k < sizeof(chunk); k++) {
                    res += chunk[k];
                }
            }

            // Writing the sum to the result file keeps the summation from being optimized away.
            ofs << res;

            duration_vec[i] = std::chrono::duration_cast<std::chrono::milliseconds>(std::chrono::system_clock::now() - begin);
            std::cout << duration_vec[i].count() << std::endl;
        }

        std::chrono::milliseconds total_time{0};
        for (auto const& v : duration_vec) {
            total_time += v;
        }
        std::cout << "Average exec time: " << total_time.count() / duration_vec.size() << std::endl;

        return 0;
    }

The program reads 1 MiB (1024 * 1024 = 1048576 bytes) per call, 4096 times in total, which covers the whole 4 GiB file. Compile with -O2 optimization and run:

    # clang++ -O2 test_ifstream.cpp -o test_ifstream
    # ./test_ifstream
    57208
    57085
    57061
    57105
    57069
    Average exec time: 57105
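The fixed count of 4096 reads only matches a file of exactly 4 GiB. As a minimal sketch (not the code used for the numbers above), the loop could instead run until end of file and use `gcount()` to see how many bytes each `read` actually delivered. The names `ReadResult` and `sum_file_chunked` are hypothetical, and unlike the original program this version treats every byte as unsigned:

```cpp
#include <cassert>
#include <cstddef>
#include <fstream>
#include <string>
#include <vector>

struct ReadResult {
    unsigned long long sum = 0;    // sum of all bytes, each treated as unsigned
    unsigned long long bytes = 0;  // number of bytes actually read
};

// Hypothetical helper: reads `path` in chunks of `chunk_size` bytes until EOF.
// If the file cannot be opened, the returned result has bytes == 0.
ReadResult sum_file_chunked(const std::string& path, std::size_t chunk_size)
{
    assert(chunk_size > 0);
    ReadResult result;
    std::ifstream ifs(path, std::ios_base::binary);
    std::vector<char> chunk(chunk_size);

    // A short final read sets eofbit and failbit, which ends the loop,
    // but gcount() still reports the bytes that were delivered.
    while (ifs) {
        ifs.read(chunk.data(), static_cast<std::streamsize>(chunk.size()));
        const std::streamsize got = ifs.gcount();
        for (std::streamsize k = 0; k < got; k++) {
            result.sum += static_cast<unsigned char>(chunk[static_cast<std::size_t>(k)]);
        }
        result.bytes += static_cast<unsigned long long>(got);
    }
    return result;
}
```

Calling `sum_file_chunked("number.txt", 1048576)` would mirror the 1 MiB chunk size of the benchmark, and comparing `bytes` with the file size shows whether the whole file was read.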

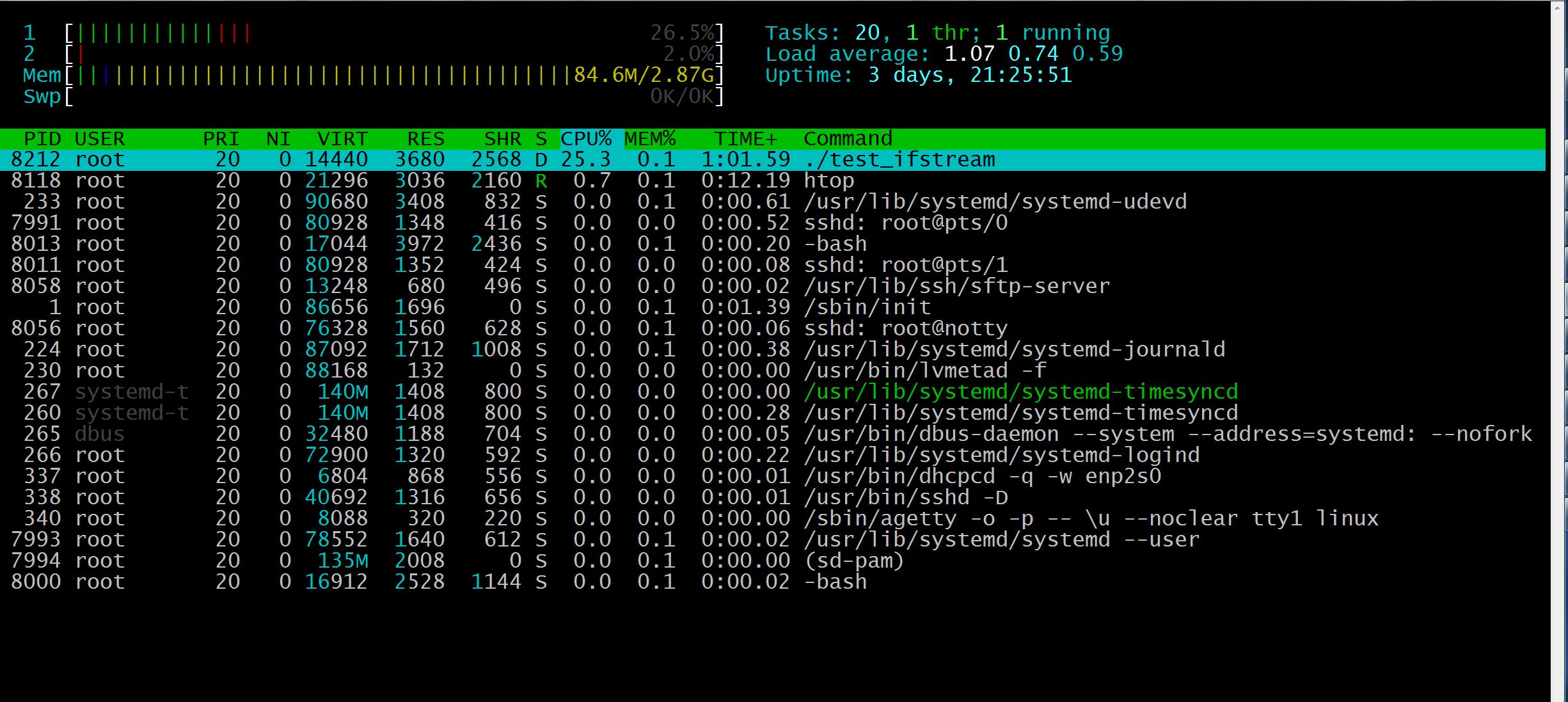

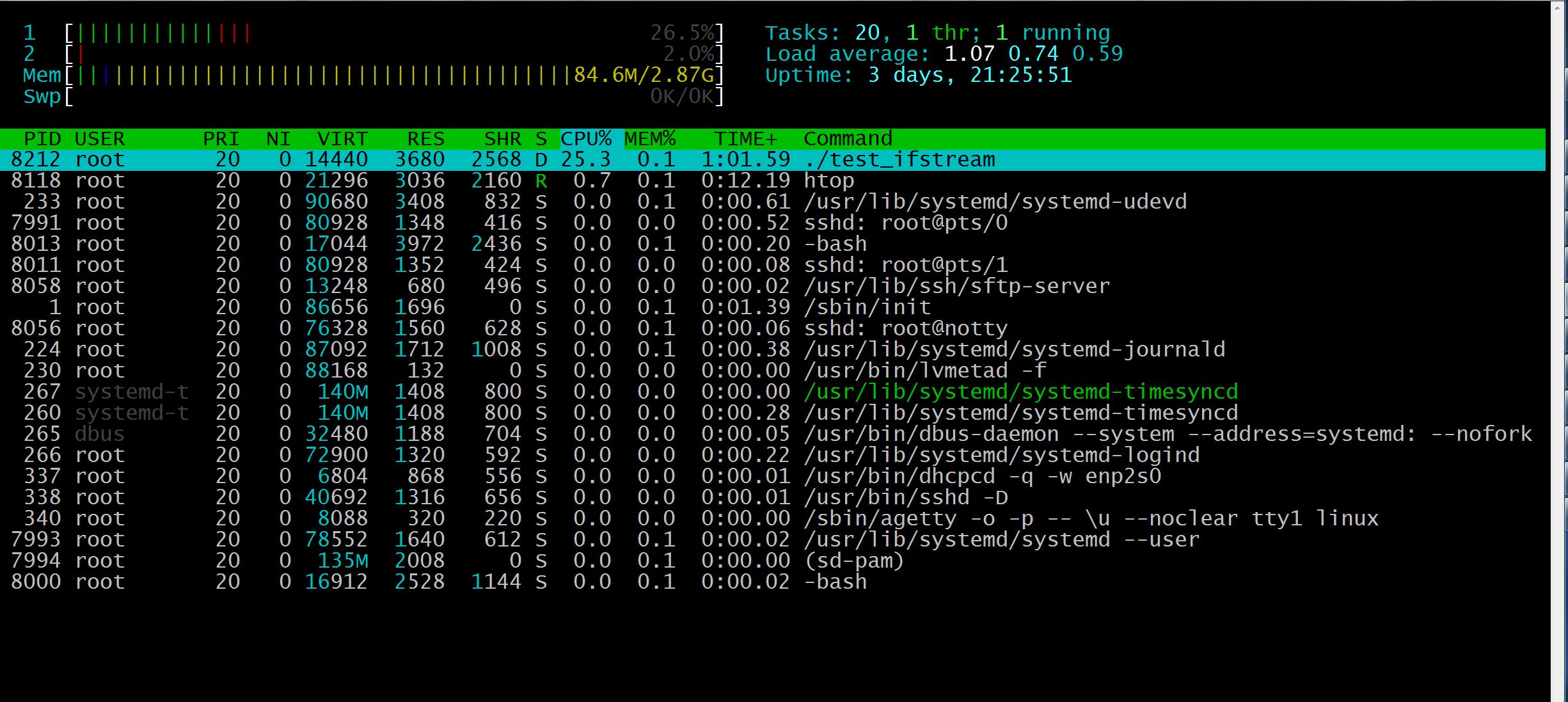

The average execution time is 57105 ms. From the htop output:

We can see test_ifstream occupies very little memory.
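htop shows the current resident size from outside the process. As a rough complement, the program could report its own peak resident set size with `getrusage`. This is only a sketch: `peak_rss_kib` is a hypothetical helper, and it assumes Linux, where `ru_maxrss` is reported in kilobytes (other systems may use different units):

```cpp
#include <sys/resource.h>

// Hypothetical helper: peak resident set size of the calling process.
// Assumes Linux, where ru_maxrss is in kilobytes. Returns -1 on failure.
long peak_rss_kib()
{
    struct rusage usage;
    if (getrusage(RUSAGE_SELF, &usage) != 0) {
        return -1;
    }
    return usage.ru_maxrss;
}
```

Printing `peak_rss_kib()` just before `main` returns would give one number per program that can be compared directly, keeping in mind that it is a peak value rather than the live figure htop displays.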

The following is test_mmap.cpp:

    #include <chrono>
    #include <cstdlib>
    #include <fstream>
    #include <iostream>
    #include <string>
    #include <vector>
    #include <sys/types.h>
    #include <sys/stat.h>
    #include <fcntl.h>
    #include <unistd.h>
    #include <sys/mman.h>

    const std::string FILE_NAME = "number.txt";
    const std::string RESULT_FILE_NAME = "result.txt";

    int main(void)
    {
        int fd = ::open(FILE_NAME.c_str(), O_RDONLY);
        if (fd < 0) {
            std::cerr << "Error opening " << FILE_NAME << std::endl;
            exit(1);
        }

        std::ofstream ofs(RESULT_FILE_NAME, std::ios_base::binary);
        if (!ofs) {
            std::cerr << "Error opening " << RESULT_FILE_NAME << std::endl;
            exit(1);
        }

        // Seeking to the end returns the file size in bytes.
        auto file_size = lseek(fd, 0, SEEK_END);

        // Time five full passes over the file; each pass maps and unmaps it.
        std::vector<std::chrono::milliseconds> duration_vec(5);
        for (std::vector<std::chrono::milliseconds>::size_type i = 0; i < duration_vec.size(); i++) {
            lseek(fd, 0, SEEK_SET);
            unsigned long long res = 0;
            auto begin = std::chrono::system_clock::now();

            // mmap reports failure with MAP_FAILED, not with a null pointer.
            void *mapping = mmap(NULL, file_size, PROT_READ, MAP_FILE | MAP_SHARED, fd, 0);
            if (mapping == MAP_FAILED) {
                std::cerr << "Error mapping " << FILE_NAME << std::endl;
                exit(1);
            }
            char *addr = static_cast<char*>(mapping);
            char *chunk = addr;

            for (off_t j = 0; j < file_size; j++) {
                res += *chunk++;
            }

            ofs << res;
            munmap(addr, file_size);

            duration_vec[i] = std::chrono::duration_cast<std::chrono::milliseconds>(std::chrono::system_clock::now() - begin);
            std::cout << duration_vec[i].count() << std::endl;
        }

        std::chrono::milliseconds total_time{0};
        for (auto const& v : duration_vec) {
            total_time += v;
        }
        std::cout << "Average exec time: " << total_time.count() / duration_vec.size() << std::endl;

        ::close(fd);
        return 0;
    }

Compile with -O2 optimization again and run:

    # clang++ -O2 test_mmap.cpp -o test_mmap
    # ./test_mmap
    57241
    57095
    57038
    57008
    57175
    Average exec time: 57111
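Both programs time each pass with `std::chrono::system_clock`, which follows the wall clock and can jump if the system time is adjusted during a run. A minimal sketch of the same measurement with `std::chrono::steady_clock`, which is monotonic, might look like this. The helpers `time_runs` and `average_ms` are hypothetical names, not part of either test program:

```cpp
#include <cassert>
#include <chrono>
#include <cstddef>
#include <vector>

// Hypothetical helper: runs `work` `runs` times and returns each pass's duration.
template <typename Work>
std::vector<std::chrono::milliseconds> time_runs(std::size_t runs, Work&& work)
{
    std::vector<std::chrono::milliseconds> durations;
    durations.reserve(runs);
    for (std::size_t i = 0; i < runs; i++) {
        const auto begin = std::chrono::steady_clock::now();
        work();
        const auto end = std::chrono::steady_clock::now();
        durations.push_back(
            std::chrono::duration_cast<std::chrono::milliseconds>(end - begin));
    }
    return durations;
}

// Integer average in milliseconds, truncated like the output of the test programs.
long long average_ms(const std::vector<std::chrono::milliseconds>& durations)
{
    assert(!durations.empty());
    std::chrono::milliseconds total{0};
    for (const auto& d : durations) {
        total += d;
    }
    return static_cast<long long>(total.count()) /
           static_cast<long long>(durations.size());
}
```

With this shape, the body of each benchmark pass becomes the lambda passed to `time_runs(5, ...)`, and both programs share the same timing and averaging code.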

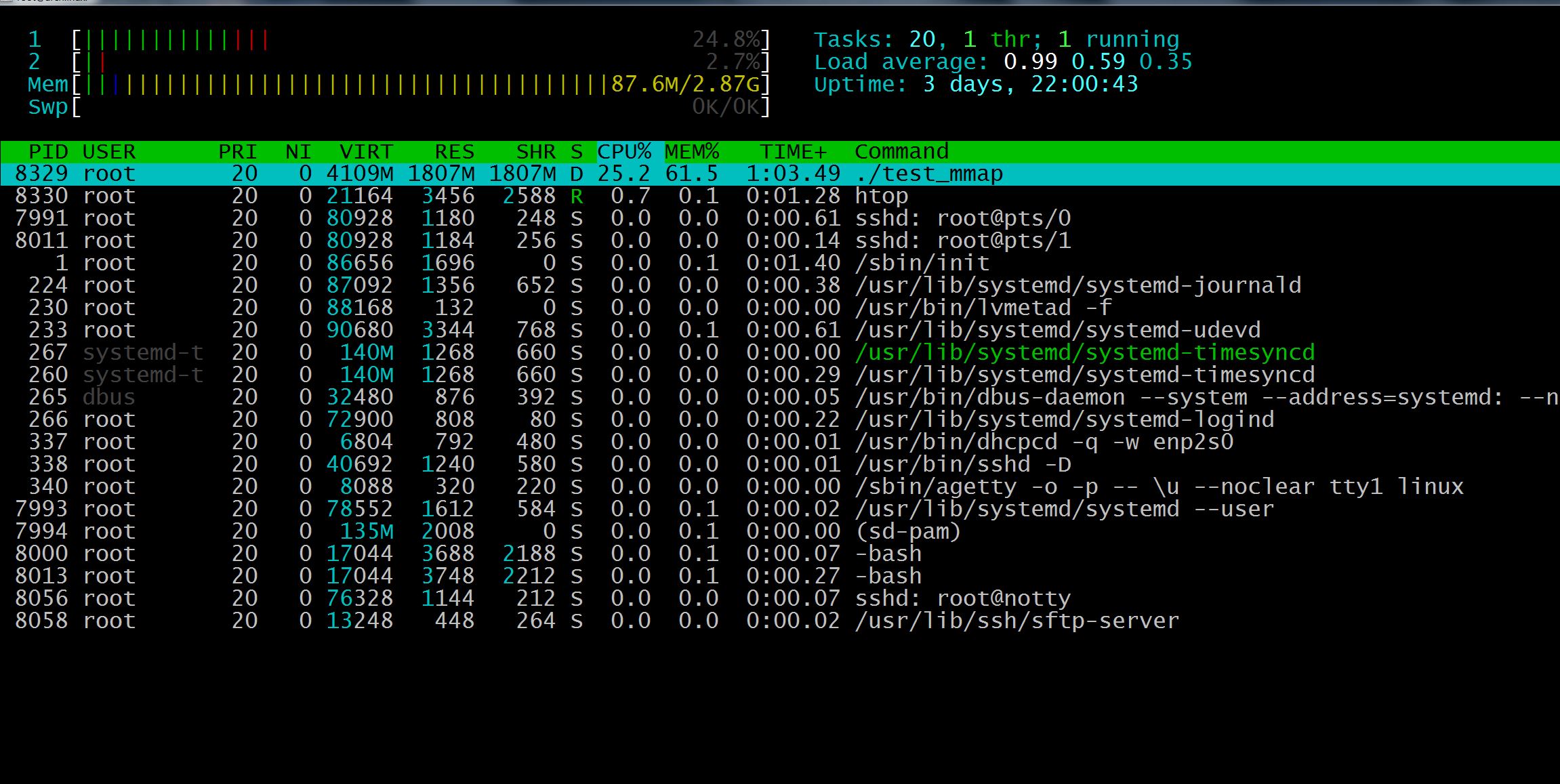

We can see that the execution time of test_mmap is similar to that of test_ifstream, whereas test_mmap uses more memory: