STXXL is an interesting project. Its own description reads:

STXXL is an implementation of the C++ standard template library STL for external memory (out-of-core) computations, i. e. STXXL implements containers and algorithms that can process huge volumes of data that only fit on disks. While the closeness to the STL supports ease of use and compatibility with existing applications, another design priority is high performance.

Recently, I had a task that operates on data too large to fit in memory, so I wanted to give stxxl a shot.

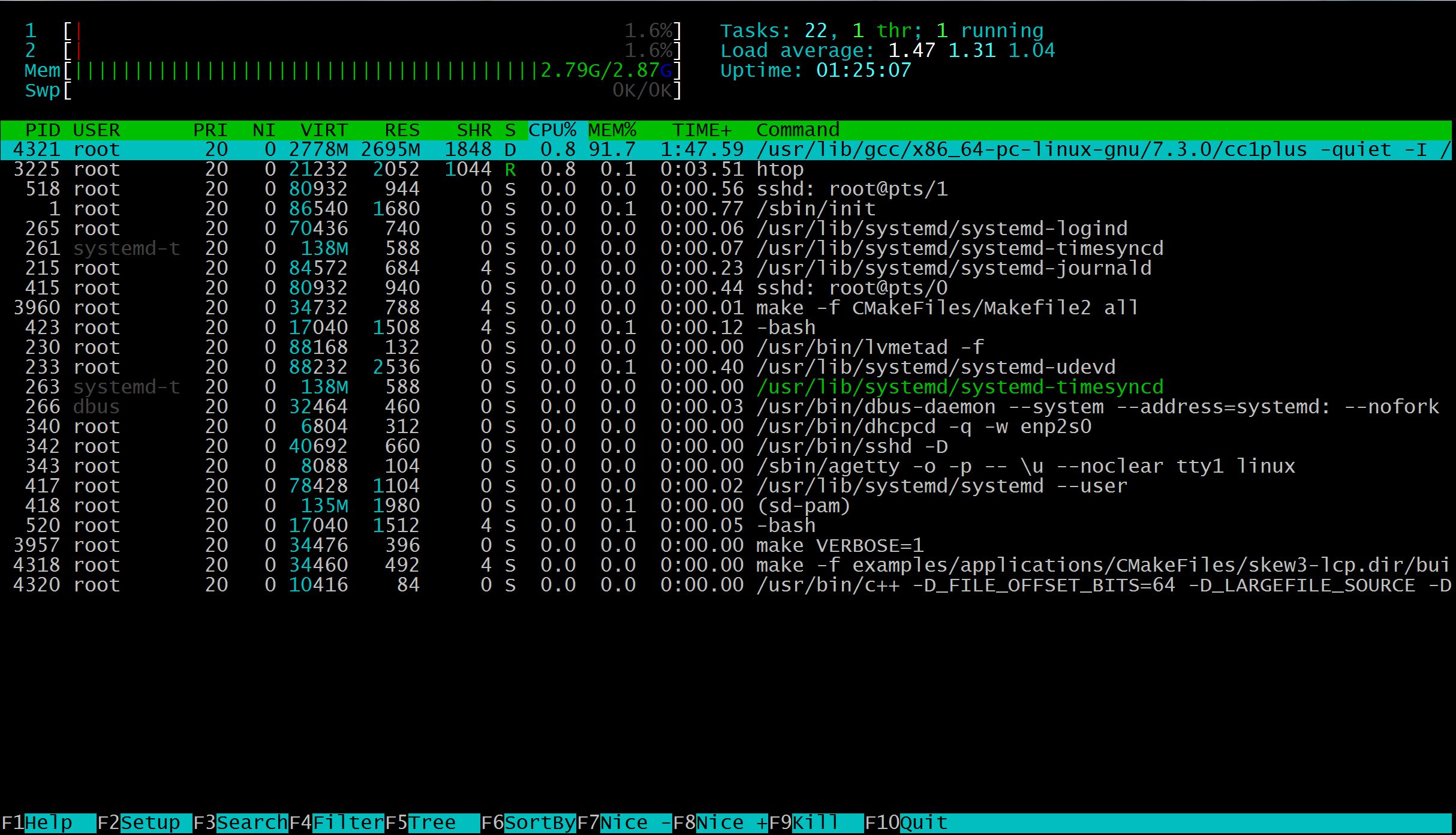

My OS is OpenBSD and the machine has less than 4G of memory. I modified the loop in vector1.cpp from:

for (int i = 0; i < 1024 * 1024; i++)

to

for (int i = 0; i < 1024 * 1024 * 1024; i++)

Since every integer occupies 4 bytes, the vector needs at least 4G of storage (1024 * 1024 * 1024 elements, 4 bytes each). Build and run the program:

# ./vector1

[STXXL-MSG] STXXL v1.4.99 (prerelease/Debug) (git 263df0c54dc168212d1c7620e3c10c93791c9c29)

[STXXL-ERRMSG] Warning: no config file found.

[STXXL-ERRMSG] Using default disk configuration.

[STXXL-MSG] Warning: open()ing /var/tmp/stxxl without DIRECT mode, as the system does not support it.

[STXXL-MSG] Disk '/var/tmp/stxxl' is allocated, space: 1000 MiB, I/O implementation: syscall delete_on_exit queue=0 devid=0

[STXXL-ERRMSG] External memory block allocation error: 2097152 bytes requested, 0 bytes free. Trying to extend the external memory space...

[STXXL-ERRMSG] External memory block allocation error: 2097152 bytes requested, 0 bytes free. Trying to extend the external memory space...

[STXXL-ERRMSG] External memory block allocation error: 2097152 bytes requested, 0 bytes free. Trying to extend the external memory space...

[STXXL-ERRMSG] External memory block allocation error: 2097152 bytes requested, 0 bytes free. Trying to extend the external memory space...

......

[STXXL-ERRMSG] External memory block allocation error: 2097152 bytes requested, 0 bytes free. Trying to extend the external memory space...

101

[STXXL-ERRMSG] Removing disk file: /var/tmp/stxxl

The program outputs 101, which is the correct result. Check /var/tmp/stxxl before it is deleted:

# ls -alt /var/tmp/stxxl

-rw-r----- 1 root wheel 4294967296 Mar 9 15:58 /var/tmp/stxxl

It is indeed the data file, and its size is 4G (4294967296 bytes).

Based on this simple test, stxxl makes a good impression on me and is worth exploring further.

P.S. Use cmake -DBUILD_TESTS=ON .. to enable building the examples.