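The Rosetta stone page on the Void Linux wiki is a good summary of the package management tools used by different Linux distributions. When you switch from one distribution to another, a big part of the work is getting familiar with the new package manager, and this table helps a lot!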

VAX

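VAX is an old 32-bit processor architecture; the first VAX-based computer was released in 1977. Around 2000, VAX computers left the stage. Since OpenBSD dropped VAX support as of version 6.0, NetBSD seems to be the only mainstream operating system that still supports VAX. Even with an operating system available, many mainstream programs and programming languages cannot run on VAX. So apart from nostalgia, does VAX still serve any purpose today?

In fact, a VAX can serve as a test platform. VAX supports C, along with some C compilers that are not yet "too old". If you are a C programmer, your C code does not depend on a 32-bit or 64-bit processor, and you happen to own a vintage VAX machine, you can try running your code on it. You might find some deeply hidden bugs. :-)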

If you want to learn more about VAX, the following documents are worth reading:

VAX Wikipedia;

Modern BSD Computing for Fun on a VAX! Trying to use a VAX in today’s world;

My beautiful VAX 4000.

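How to measure how many clock cycles a piece of code takes (x86 platform)

Daniel Lemire's benchmark code shows how to measure, on x86, how many clock cycles a piece of code takes to run: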

......
#define RDTSC_START(cycles) \
    do { \
        unsigned cyc_high, cyc_low; \
        __asm volatile("cpuid\n\t" \
                       "rdtsc\n\t" \
                       "mov %%edx, %0\n\t" \
                       "mov %%eax, %1\n\t" \
                       : "=r"(cyc_high), "=r"(cyc_low)::"%rax", "%rbx", "%rcx", \
                         "%rdx"); \
        (cycles) = ((uint64_t)cyc_high << 32) | cyc_low; \
    } while (0)

#define RDTSC_FINAL(cycles) \
    do { \
        unsigned cyc_high, cyc_low; \
        __asm volatile("rdtscp\n\t" \
                       "mov %%edx, %0\n\t" \
                       "mov %%eax, %1\n\t" \
                       "cpuid\n\t" \
                       : "=r"(cyc_high), "=r"(cyc_low)::"%rax", "%rbx", "%rcx", \
                         "%rdx"); \
        (cycles) = ((uint64_t)cyc_high << 32) | cyc_low; \
    } while (0)
......
RDTSC_START(cycles_start);
......
RDTSC_FINAL(cycles_final);
cycles_diff = (cycles_final - cycles_start);
......

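This code is in fact based on How to Benchmark Code Execution Times on Intel® IA-32 and IA-64 Instruction Set Architectures. It works as follows:

(1) The cpuid instructions at the start and end of the measurement prevent out-of-order execution, that is, they guarantee that instructions before cpuid are not scheduled to execute after it. As a result, only the code being measured sits between the two cpuid instructions, with nothing else mixed in.

(2) Both rdtsc and rdtscp read the number of clock cycles since the system started (the high 32 bits are stored in the edx register and the low 32 bits in eax), and rdtscp additionally guarantees that all code before it has completed. Subtracting the first sample from the second gives the number of clock cycles we want.

To sum up, with the three assembly instructions cpuid, rdtsc and rdtscp we can work out how many clock cycles a piece of code actually consumes.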
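The arithmetic behind the two macros can be sketched in C++. This is only a minimal sketch, not Daniel Lemire's code: the names `read_tsc_end`, `combine_tsc` and `cycles_elapsed` are hypothetical, and `read_tsc_end` assumes a GCC or Clang toolchain on x86 that provides `<x86intrin.h>`.

```cpp
#include <cassert>
#include <cstdint>

#if defined(__x86_64__) || defined(__i386__)
#include <x86intrin.h>  // assumption: GCC/Clang on x86; provides __rdtscp

// Hypothetical helper: sample the counter with rdtscp, which waits for
// earlier instructions to finish before reading the TSC.
inline std::uint64_t read_tsc_end() {
    unsigned int aux = 0;  // receives the IA32_TSC_AUX value; unused here
    return __rdtscp(&aux);
}
#endif

// Join the two 32-bit halves (edx = high, eax = low) into one 64-bit count.
// The cast must come before the shift: shifting a 32-bit value by 32 bits
// is undefined behaviour.
inline std::uint64_t combine_tsc(std::uint32_t high, std::uint32_t low) {
    return (static_cast<std::uint64_t>(high) << 32) | low;
}

// Cycles between two samples; assumes the second sample was taken later.
inline std::uint64_t cycles_elapsed(std::uint64_t start, std::uint64_t final_) {
    assert(final_ >= start);
    return final_ - start;
}
```

The intrinsic alone does not add the cpuid serialization that the macros perform, so it is probably better suited to rough measurements than to the careful ones described above.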

P.S. There is also a related discussion on Stack Overflow.

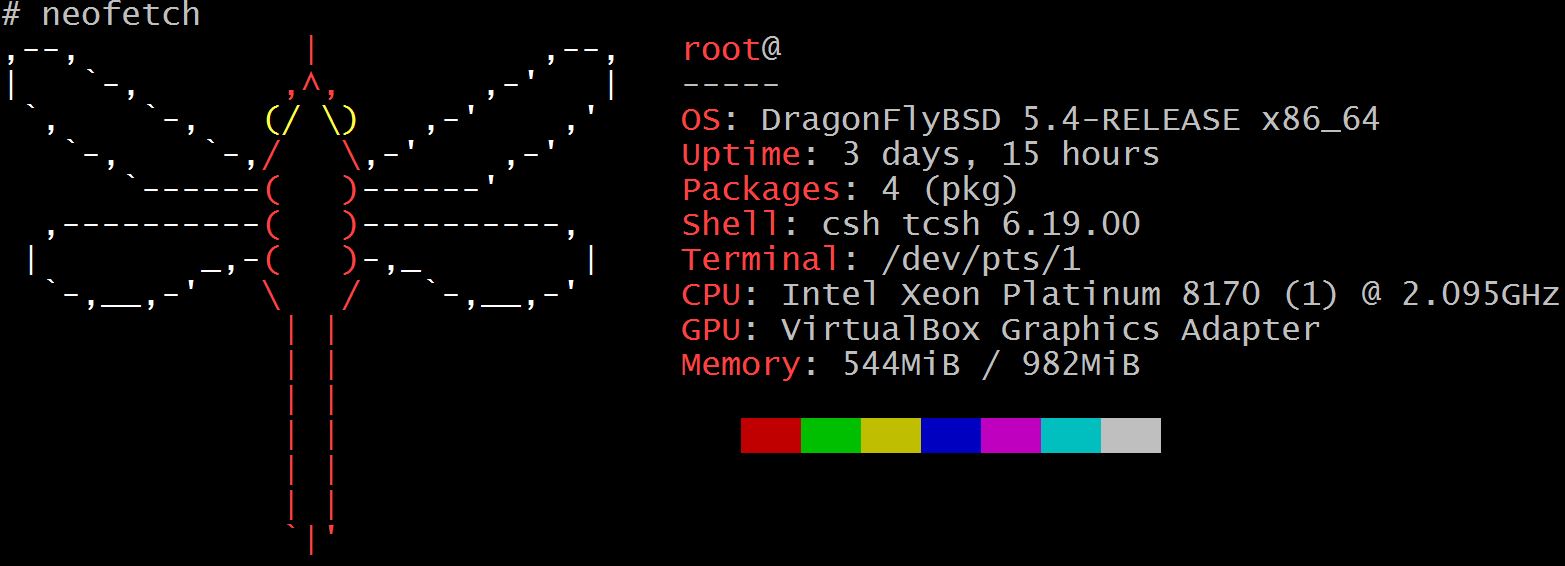

neofetch

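How to deal with anxiety

Recently, in an idle moment, I watched some of Soldiers Sortie (《士兵突击》), and one line from Yuan Lang stuck with me: "I like people who are not anxious." Indeed, people living in modern society get anxious easily. Take me as an example: I am middle-aged, with plenty of pressure from both work and life, so some anxiety is unavoidable, which makes handling it properly all the more important. Personally, I think dealing with anxiety comes down to two things:

First, recognize that anxiety does nothing to solve the problem; it only makes you irritable and irrational. When you feel anxious, try to calm down and think about whether there is a way to change the situation. If necessary, tell yourself that "all of this was meant to be", and then accept it.

Second, stop comparing yourself with others all the time. Whether the people around you live better or worse than you do has nothing to do with you. Do not waste time and energy on things that do not concern you. Keep yourself occupied, use every bit of time you can, and fill up your life: exercise, learn new things, read, look after your family, and so on. That way you can live in the present and forget about anxiety.

Of course, everything is easier said than done. I often get anxious myself. But this is something you can only solve on your own; nobody else can help. So all you can do is try your best to shake it off.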

GKrellM

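How to measure the system clock frequency

Daniel Lemire's Measuring the system clock frequency using loops (Intel and ARM) explains how to measure the system clock frequency with assembly instructions. Taking Intel x86 processors as the example (the principle is similar on ARM):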

; initialize 'counter' with the desired number
label:
dec counter ; decrement counter
jnz label ; goes to label if counter is not zero

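At execution time, modern Intel x86 processors "fuse" the dec and jnz instructions into a single instruction and complete it in one clock cycle. So once you know how long a given number of loop iterations took, you can compute an approximation of the current system clock frequency.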
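The calculation itself is a single division. Here is a minimal sketch, assuming exactly one cycle per loop iteration as described above; the function name `estimate_ghz` is hypothetical and does not come from Daniel Lemire's code.

```cpp
#include <cassert>
#include <cstdint>

// Assumption: each loop iteration takes one clock cycle, so the number of
// iterations equals the number of cycles. Cycles per nanosecond is GHz.
inline double estimate_ghz(std::uint64_t iterations, double nanoseconds) {
    assert(nanoseconds > 0.0);
    return static_cast<double>(iterations) / nanoseconds;
}
```

For example, 3000 iterations that take 1000 nanoseconds would correspond to 3.0 GHz.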

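In the code, Daniel Lemire uses a technique called "measure-twice-and-subtract": suppose the loop count is 65536 and each experiment runs twice. The first run executes 65536 * 2 iterations and takes nanoseconds1; the second run executes 65536 iterations and takes nanoseconds2. That gives three estimates of the time needed for 65536 iterations: nanoseconds1 / 2, nanoseconds1 - nanoseconds2, and nanoseconds2. The experiment counts as valid only if these three times differ from each other by less than a threshold: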

......
double nanoseconds = (nanoseconds1 - nanoseconds2);
if ((fabs(nanoseconds - nanoseconds1 / 2) > 0.05 * nanoseconds) or
    (fabs(nanoseconds - nanoseconds2) > 0.05 * nanoseconds)) {
    return 0;
}
......
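To try the check with concrete numbers, it can be wrapped in a small standalone function. This is a sketch under assumptions: the name `is_consistent` and the `tolerance` parameter are hypothetical, and the boolean return value is my own choice, since the snippet above elides what happens when a measurement passes.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical wrapper around the check shown above.
// nanoseconds1: time for 2 * N iterations; nanoseconds2: time for N iterations.
inline bool is_consistent(double nanoseconds1, double nanoseconds2,
                          double tolerance = 0.05) {
    assert(tolerance > 0.0);
    const double nanoseconds = nanoseconds1 - nanoseconds2;
    // All three estimates of the time for N iterations must agree within
    // the tolerance; a negative difference always fails the comparison.
    if (std::fabs(nanoseconds - nanoseconds1 / 2) > tolerance * nanoseconds ||
        std::fabs(nanoseconds - nanoseconds2) > tolerance * nanoseconds) {
        return false;
    }
    return true;
}
```

With 2000 ns and 1000 ns the three estimates are all 1000 ns and the measurement passes; with 2000 ns and 1200 ns the estimates are 1000, 800 and 1200 ns, so it is rejected.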

Finally, the valid measurements are sorted and the median is taken:

......
std::cout << "Got " << freqs.size() << " measures." << std::endl;
std::sort(freqs.begin(),freqs.end());
std::cout << "Median frequency detected: " << freqs[freqs.size() / 2] << " GHz" << std::endl;
......
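The median step can also be written as a standalone function. In this sketch the name `median_frequency` is hypothetical, and `std::nth_element` is swapped in for the full `std::sort` used above; it selects the same element, `freqs[freqs.size() / 2]`, without ordering the rest of the vector.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Returns the element that would sit at index size() / 2 after sorting.
// The vector is taken by value so the caller's data is left untouched.
inline double median_frequency(std::vector<double> freqs) {
    assert(!freqs.empty());  // indexing an empty vector would be undefined
    auto mid = freqs.begin() + freqs.size() / 2;
    std::nth_element(freqs.begin(), mid, freqs.end());
    return *mid;
}
```

For an even number of measurements this picks the upper of the two middle values, the same as the indexing in the original snippet.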

On my system, lscpu reports the following CPU clock frequency:

$ lscpu
......
CPU MHz: 1000.007
CPU max MHz: 3700.0000
CPU min MHz: 1000.0000
......

The actual measurement results:

$ ./loop.sh
g++ -O2 -o reportfreq reportfreq.cpp -std=c++11 -Wall -lm
measure using a tight loop:
Got 9544 measures.
Median frequency detected: 3.39196 GHz
measure using an unrolled loop:
Got 9591 measures.
Median frequency detected: 3.39231 GHz
measure using a tight loop:
Got 9553 measures.
Median frequency detected: 3.39196 GHz
measure using an unrolled loop:
Got 9511 measures.
Median frequency detected: 3.39231 GHz
measure using a tight loop:
Got 9589 measures.
Median frequency detected: 3.39213 GHz
measure using an unrolled loop:
Got 9540 measures.
Median frequency detected: 3.39196 GHz
.......

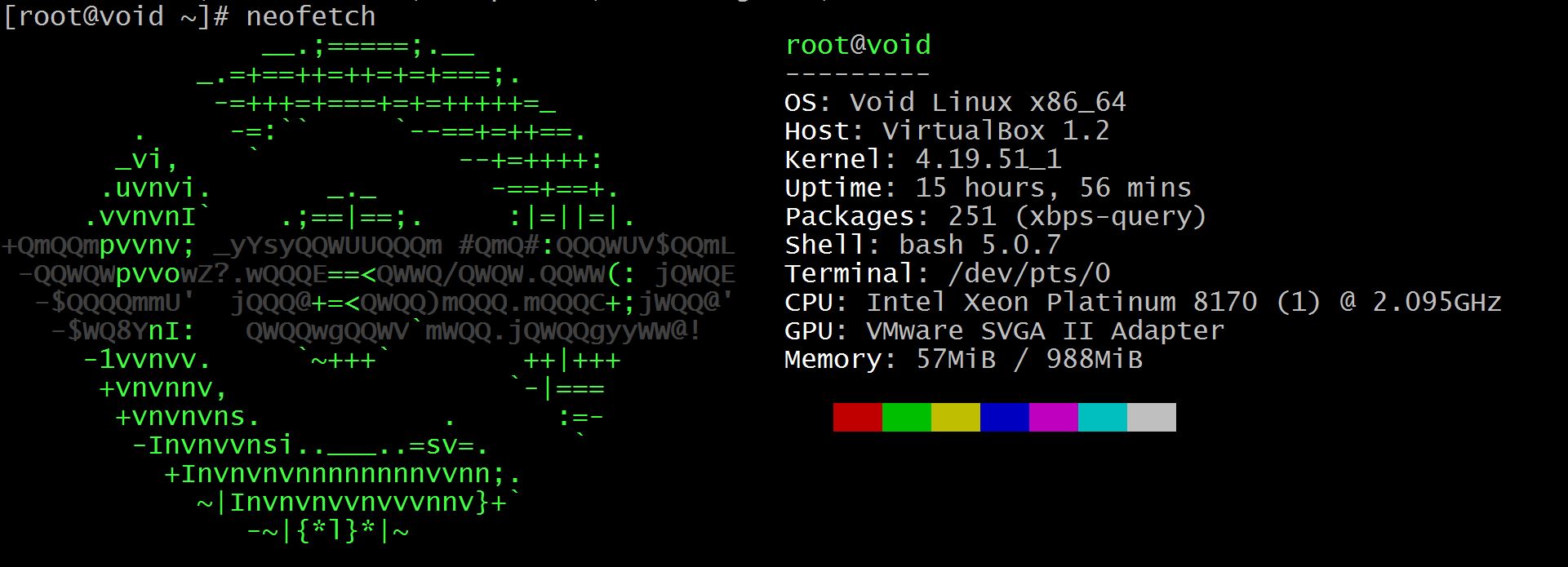

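First impressions of Void Linux

Recently I needed to do some Linux kernel related testing, and to avoid affecting my colleagues I decided to use a virtual machine. I have used Arch Linux for the past 3 years and have grown a little tired of it, so I wanted to try a new distribution (on the condition that it is also rolling-release). This time I chose Void Linux.

What attracts me to Void Linux is the way it goes its own way:

(1) It does not use systemd, the current mainstream choice, and uses runit as its init system instead, which is closer to the traditional Unix way;

(2) It uses LibreSSL, developed by the OpenBSD team, rather than OpenSSL.

In addition, one important difference between Void Linux and Arch Linux is the kernel version: Arch Linux ships the stable kernel (currently 5.1.5), while Void Linux ships the long-term one (4.19.46).

After a week of use, Void Linux feels good overall and meets my development needs. If you are interested, give it a try.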

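A few words on "whiteboard interviews"

"Whiteboard interviews" are a well-worn topic, and opinions on their pros and cons differ. What follows is only my personal view:

(1) Companies like Google, Facebook and Amazon still set a high bar for "whiteboard interviews". If your goal is to join one of them, you have no other choice: accept reality and grind through practice problems.

(2) Some companies do not say a single word to you and just send a link asking you to solve problems first. That is very disrespectful.

(3) Companies that use "whiteboard interviews" should think clearly about what they actually need, which skills of a candidate a "whiteboard interview" can assess, and whether "whiteboard interviews" really suit their company.

(4) Spending time practicing for "whiteboard interviews" does benefit an engineer, but the return on the effort is not very high.

(5) I have been through a number of "whiteboard interviews" and failed most of them. My memory is poor, and I forget many algorithms once I have not used them for a while. At work, when I run into an algorithm I have forgotten or do not understand, I look it up online, and that never seems to have affected my work.

To sum up, if "whiteboard interviews" are a hard requirement at the company you have your heart set on, or you feel the time spent on practice problems is worth it, then practice a lot. Otherwise, spend the time on something else, such as reading a novel.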

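Some notes on writing technical tutorials in English

I started trying to write technical tutorials in English in mid-2016, and spent the better part of half a year on an introductory book about the Go language. I wrote nothing in 2017; in 2018 I wrote three booklets covering concurrent programming, network communication and so on. So far this year I have finished another short booklet, on performance monitoring for Linux. Looking back, the time and energy spent on these documents was well worth it, and I will keep this work going.

My original motivation was that I have a poor memory and forget what I learn after a while, so I planned to keep it in the form of notes. I chose English partly to exercise my English and partly because I thought the writing might help other people too. In hindsight, the person who has benefited most is me: for more than a year, for example, I have often consulted my own OpenMP manual at work :-). I am also glad that my tutorials are sometimes cited in Stack Overflow answers. Another "by-product" of this work is that, to really understand a topic, I often have to read the source code, which means I come across bugs along the way and contribute patches, doing my small part for the open source community.

I will keep writing technical tutorials in English. Since these are not novels, my goal is to be concise and to the point, with no padding, so that readers get the core content in the shortest possible time.

P.S. Link to the English tutorials I have written.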