Last week, I began reading the awesome Professional CUDA C Programming and came across the following sentence in the GPU Architecture Overview section:

CUDA employs a Single Instruction Multiple Thread (SIMT) architecture to manage and execute threads in groups of 32 called warps.

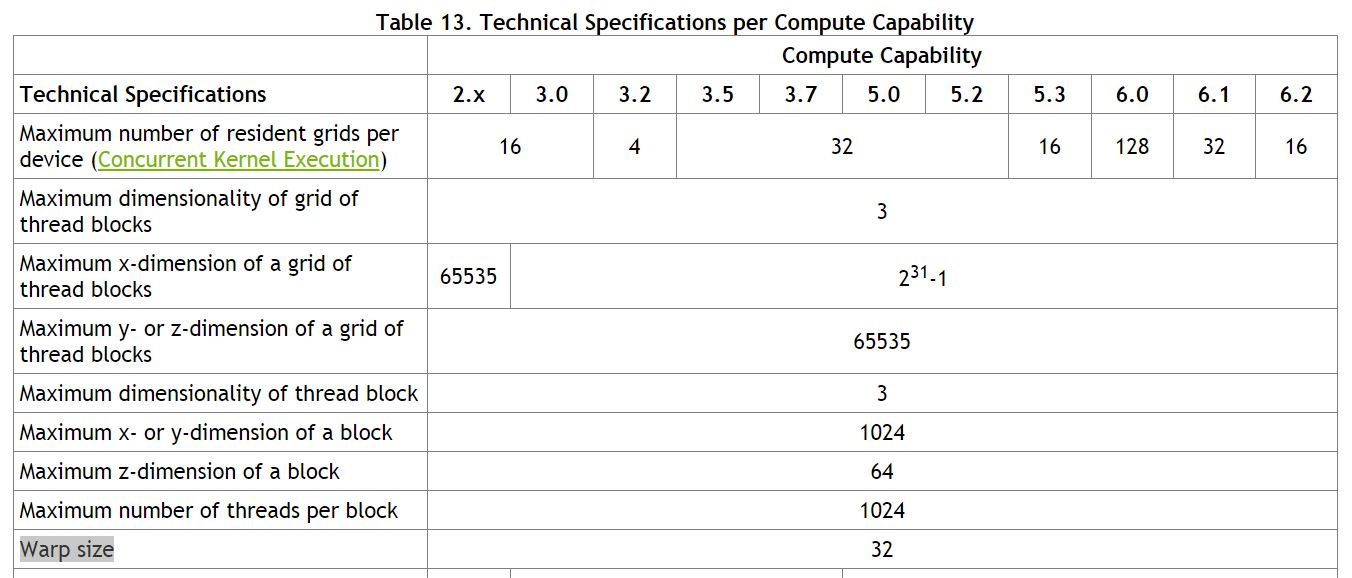

Since the book was published in 2014, I wondered whether the warp size is still 32 regardless of Compute Capability. To find out, I turned to the official CUDA C Programming Guide and found the answer in its Compute Capability table:

Yep, the warp size is 32 for every Compute Capability listed in the table.

BTW, you can also use the following program to query the warp size of your own device:

    #include <stdio.h>
    #include <cuda_runtime.h>

    int main(void) {
        cudaDeviceProp deviceProp;

        /* Query the properties of device 0 (the first CUDA device). */
        if (cudaSuccess != cudaGetDeviceProperties(&deviceProp, 0)) {
            printf("Get device properties failed.\n");
            return 1;
        }

        /* warpSize is reported in threads. */
        printf("The warp size is %d.\n", deviceProp.warpSize);
        return 0;
    }

Here is the output on my CUDA box:

The warp size is 32.
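To make "threads in groups of 32" a bit more concrete, here is a minimal sketch of the arithmetic involved. The helper names (`warps_per_block`, `warp_index`, `lane_index`) are my own and not part of the CUDA API. It assumes threads are numbered linearly within a block and that consecutive thread IDs are packed into warps starting from thread 0, as the Programming Guide describes. It is plain host-side C, so it should also compile inside a `.cu` file.

```c
/* Hypothetical helper (not a CUDA API): number of warps needed to hold
 * `threads` threads. A partially filled last warp still counts as one. */
static int warps_per_block(int threads, int warp_size) {
    return (threads + warp_size - 1) / warp_size;  /* ceiling division */
}

/* Hypothetical helper: which warp a thread belongs to, given its
 * linear index within the block. */
static int warp_index(int thread_idx, int warp_size) {
    return thread_idx / warp_size;
}

/* Hypothetical helper: position of the thread inside its warp
 * (often called the lane). */
static int lane_index(int thread_idx, int warp_size) {
    return thread_idx % warp_size;
}
```

For example, with a warp size of 32, a block of 100 threads occupies `warps_per_block(100, 32) == 4` warps (the last one only partly filled), and thread 70 sits in warp 2 at lane 6. In real code you would pass `deviceProp.warpSize` rather than hard-coding 32.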

Thank you for clarifying this point.

Thanks. Hope more clarifications like this