Wireshark’s tshark program can’t reliably process pcapng files generated on macOS. For example:

$ sudo tcpdump -w foo.pcapng

Password:

tcpdump: data link type PKTAP

tcpdump: listening on pktap, link-type PKTAP (Apple DLT_PKTAP), capture size 262144 bytes

^C24 packets captured

27 packets received by filter

0 packets dropped by kernel

Then use tshark to read the generated foo.pcapng and write it back out to a new file:

$ tshark -r foo.pcapng -w bar.pcapng

tshark: An error occurred while writing to the file "bar.pcapng": Internal error.

I have also run into the following error before:

$ tshark -r apsd-107.pcapng -w foo.pcapng

tshark: The capture file being read can't be written as a "pcapng" file.

macOS ships its own customized libpcap and tcpdump, so if a pcapng file was generated by the macOS tcpdump, using tcpdump itself to process that file seems to be the only choice.

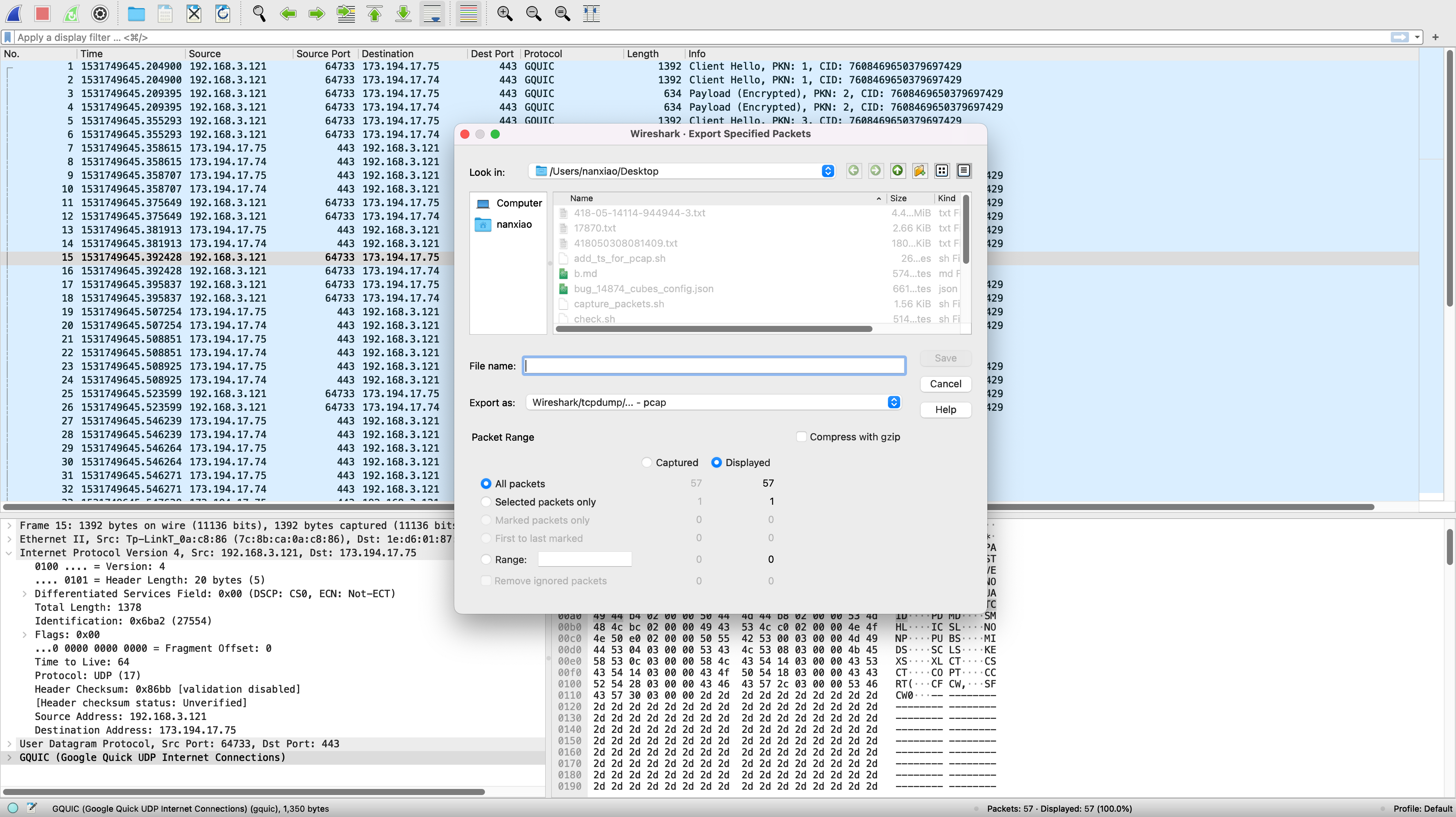
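Before converting a capture or blaming tshark, it can help to confirm which container format a file really uses, because the name passed to `-w` does not determine the format. The sketch below only inspects the first four bytes of the file. `capture_format` is a hypothetical helper name chosen for illustration, not part of tcpdump or Wireshark, and it assumes a POSIX shell with `od` and `tr` available.

```shell
#!/bin/sh
# capture_format FILE
# Hypothetical helper: prints "pcapng", "pcap", or "unknown"
# depending on the magic number in the first four bytes of FILE.
capture_format() {
    # Dump the first four bytes as hex, then strip all whitespace.
    magic=$(od -An -tx1 -N4 "$1" 2>/dev/null | tr -d ' \t\n')
    case "$magic" in
        0a0d0d0a)          echo "pcapng" ;;  # pcapng Section Header Block type
        d4c3b2a1|a1b2c3d4) echo "pcap" ;;    # classic pcap, microsecond timestamps
        4d3cb2a1|a1b23c4d) echo "pcap" ;;    # classic pcap, nanosecond timestamps
        *)                 echo "unknown" ;; # unreadable file or another format
    esac
}
```

After sourcing the function, `capture_format foo.pcapng` reports the detected format. A file that tshark rejects can still be read back with the system tool via `tcpdump -r foo.pcapng`.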

As a workaround, if you don’t mind losing some information, you can use Wireshark to convert the pcapng file to pcap first: