For the past two weeks I was tortured by a nasty bug: not always reproducible, involving third-party code, and in a parallel program. Thank goodness, the root cause was found this Thursday. I think the whole process is a good lesson in how to debug a parallel program, so I am recording it here in the hope that it gives you some tips when you face a similar situation.

Background: I am using FHEW to implement some complex calculations and experiments. Currently FHEW only works in a single-threaded environment, since many functions share the same global variables, like this:

......

double *in;

fftw_complex *out;

fftw_plan plan_fft_forw, plan_fft_back;

void FFTsetup() {

in = (double*) fftw_malloc(sizeof(double) * 2*N);

out = (fftw_complex*) fftw_malloc(sizeof(fftw_complex) * (N + 2));

plan_fft_forw = fftw_plan_dft_r2c_1d(2*N, in, out, FFTW_PATIENT);

plan_fft_back = fftw_plan_dft_c2r_1d(2*N, out, in, FFTW_PATIENT);

}

void FFTforward(Ring_FFT res, const Ring_ModQ val) {

for (int k = 0; k < N; ++k) {

in[k] = (double) (val[k]);

in[k+N] = 0.0;

}

fftw_execute(plan_fft_forw);

for (int k = 0; k < N2; ++k)

res[k] = (double complex) out[2*k+1];

}

.....

So to use it in parallel, I first needed to modify it to work in a multi-threaded program. This work involved allocating resources for every thread, adding locks around the global variables that must stay shared, and so on. The multi-threaded FHEW always worked fine until I used it to implement a complicated function. Most of the time the result was correct, but on rare occasions it was wrong. So I began the tough debugging work.
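To illustrate the "resources for every thread" idea, here is a minimal sketch and not the actual FHEW patch. The names `FftWorkspace`, `workspace_init`, `workspace_free` and `transform_forward` are hypothetical, `N` is shrunk to 8, and the FFTW call is replaced by a placeholder loop so that the example compiles without the library. The point is only that each thread owns its buffers instead of sharing the file-scope `in` and `out`.

```c
#include <assert.h>
#include <stdlib.h>

#define N 8  /* illustrative size only; the real N in FHEW is larger */

/* Hypothetical per-thread workspace replacing the global `in` / `out`. */
typedef struct {
    double *in;   /* 2*N input samples */
    double *out;  /* 2*N output samples (stand-in for the fftw_complex buffer) */
} FftWorkspace;

/* Allocate one workspace; returns 0 on success, -1 on allocation failure. */
int workspace_init(FftWorkspace *ws) {
    assert(ws != NULL);
    ws->in = calloc(2 * N, sizeof *ws->in);
    ws->out = calloc(2 * N, sizeof *ws->out);
    if (ws->in == NULL || ws->out == NULL) {
        free(ws->in);
        free(ws->out);
        ws->in = ws->out = NULL;
        return -1;
    }
    return 0;
}

/* Release the buffers owned by one workspace. */
void workspace_free(FftWorkspace *ws) {
    assert(ws != NULL);
    free(ws->in);
    free(ws->out);
    ws->in = ws->out = NULL;
}

/* Same shape as FFTforward, but every buffer it touches belongs to `ws`. */
void transform_forward(FftWorkspace *ws, double *res, const int *val) {
    assert(ws != NULL && res != NULL && val != NULL);
    for (int k = 0; k < N; ++k) {
        ws->in[k] = (double) val[k];
        ws->in[k + N] = 0.0;
    }
    /* Placeholder for the real transform: just doubles every sample. */
    for (int k = 0; k < 2 * N; ++k)
        ws->out[k] = 2.0 * ws->in[k];
    for (int k = 0; k < N; ++k)
        res[k] = ws->out[k];
}
```

Each thread would call `workspace_init` once at start-up and pass its own workspace to every transform, so two threads never write to the same buffer.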

(1) Log. Undoubtedly, logging is the most effective of all debugging tools. I added as many traces as I could. One important point: since multiple threads are running simultaneously, you must be able to tell which thread each log line comes from. For example, if you use OpenMP, you can use the following code to print the thread ID:

......

printf("Thread(%d/%d) ......", omp_get_thread_num(), omp_get_num_threads(), ......);

......
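The same idea can be wrapped in a small helper so that every trace carries the thread tag automatically. This is only a sketch: `format_log` and `log_line` are hypothetical names, and the `_OPENMP` guard lets the file build (as a single thread) when OpenMP is not enabled. `omp_get_thread_num()` and `omp_get_num_threads()` are the same OpenMP calls used above.

```c
#include <assert.h>
#include <stdio.h>
#ifdef _OPENMP
#include <omp.h>
#endif

/* ID of the calling thread, or 0 when built without OpenMP. */
static int current_thread(void) {
#ifdef _OPENMP
    return omp_get_thread_num();
#else
    return 0;
#endif
}

/* Size of the current thread team, or 1 when built without OpenMP. */
static int thread_count(void) {
#ifdef _OPENMP
    return omp_get_num_threads();
#else
    return 1;
#endif
}

/* Write "Thread(id/count) msg" into buf; returns what snprintf returns. */
int format_log(char *buf, size_t size, const char *msg) {
    assert(buf != NULL && msg != NULL);
    return snprintf(buf, size, "Thread(%d/%d) %s",
                    current_thread(), thread_count(), msg);
}

/* Print one tagged line with a single fprintf call, which makes it
   less likely that lines from different threads get interleaved. */
void log_line(const char *msg) {
    char buf[256];
    format_log(buf, sizeof buf, msg);
    fprintf(stderr, "%s\n", buf);
}
```

Outside a parallel region the tag is `Thread(0/1)`; inside one, each thread prints its own ID, so the log can be filtered per thread afterwards.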

After analyzing the log, one function stood out, but it did nothing except use 2 consecutive HomNAND calls to implement an AND operation (think of HomNAND as just a NAND gate in a logic circuit; two NAND gates can realize an AND gate). According to the AND gate truth table, when both inputs are 0 the output should be 0 too, but in my program the result was 1, which caused the final error.
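For reference, the expected behaviour can be written down on plain bits. This sketch assumes the textbook wiring, where the second NAND receives the output of the first on both inputs; how the two HomNAND calls were actually wired in my program is not shown here. `nand_gate` and `and_from_nand` are hypothetical plaintext stand-ins, not FHEW functions.

```c
#include <assert.h>

/* Plaintext NAND on bits 0/1 (stand-in for the homomorphic HomNAND). */
int nand_gate(int a, int b) {
    assert((a == 0 || a == 1) && (b == 0 || b == 1));
    return !(a && b);
}

/* AND built from two NANDs: the second gate negates the first one. */
int and_from_nand(int a, int b) {
    int t = nand_gate(a, b);  /* t = NOT (a AND b) */
    return nand_gate(t, t);   /* NOT t = a AND b   */
}
```

With inputs 0 and 0, the first gate gives 1 and the second gives 0, which is exactly the case where my program returned 1 instead.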

(2) Simplify the program and try to find a reproducible condition. Since the bug was related to HomNAND and my original program was fairly complicated, I needed some simple tests to find a condition that reproduced it. I designed 3 experiments:

a) Use the original single-threaded FHEW, and execute one HomNAND continuously:

for (;;) {

......

HomNAND();

......

}

b) Use the modified multi-threaded FHEW, and spawn 8 threads. Every thread runs one HomNAND in an infinite loop:

#pragma omp parallel for

for (int loop = 0; loop < 8; loop++) {

......

HomNAND();

......

}

c) Use the modified multi-threaded FHEW, and spawn 8 threads. Every thread runs two HomNANDs in an infinite loop to achieve the AND function:

#pragma omp parallel for

for (int loop = 0; loop < 8; loop++) {

......

HomNAND();

HomNAND();

......

}

After testing, I found that cases a) and b) were always OK, while c) would generate wrong results after running for 1 or 2 hours.

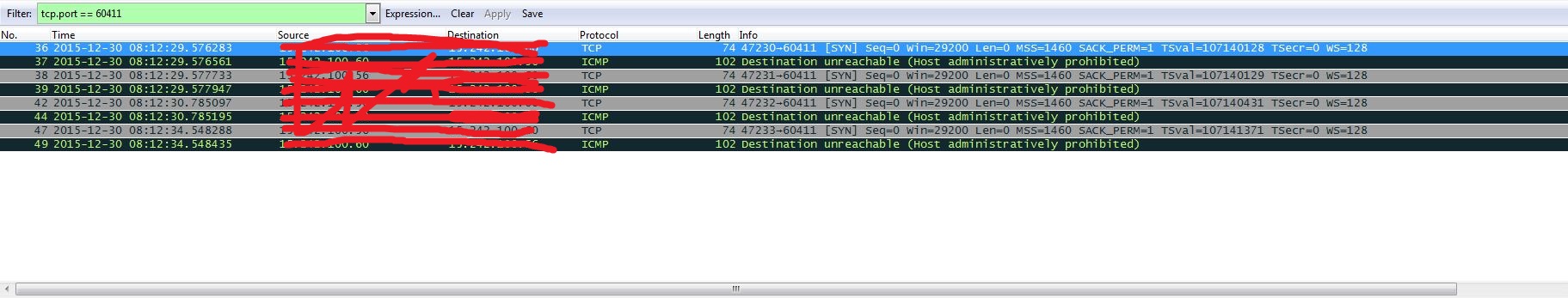

(3) Now things became intricate. Because I had modified FHEW, and FHEW also uses FFTW, it was not easy to locate where the problem was. Additionally, thread-safe usage of FFTW involves many discussions (like this) and pitfalls (like this), so I turned to the authors of FFTW to make sure my code was not wrong.

(4) Back to the experiments, I suddenly realized that I had tested the single-thread-single-HomNAND, multiple-thread-single-HomNAND, and multiple-thread-two-HomNAND cases, but what about single-thread-two-HomNAND? If the single-thread-two-HomNAND case could fail, it would prove that my modifications were innocent and that the problem resided in the original FHEW code:

for (;;) {

......

HomNAND();

HomNAND();

......

}
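For a bug that shows up only after an hour, it helps if the loop checks every result itself and stops at the first mismatch, recording the iteration and the inputs. Below is a minimal sketch of such a harness on plain bits. `stress_and`, `reference_nand` and `broken_nand` are hypothetical names; in a real run the gate would be a wrapper around HomNAND plus decryption, which is not shown here.

```c
#include <assert.h>
#include <stdio.h>

/* Any two-input gate on bits 0/1. */
typedef int (*GateFn)(int, int);

/* Correct plaintext NAND. */
static int reference_nand(int a, int b) { return !(a && b); }

/* Deliberately wrong gate, used only to show the harness catching a fault. */
static int broken_nand(int a, int b) { (void) a; (void) b; return 1; }

/* Build AND from two calls to `gate`, up to max_iters times.
   Returns the index of the first failing iteration, or -1 if none failed. */
long stress_and(GateFn gate, long max_iters) {
    assert(gate != NULL && max_iters >= 0);
    for (long i = 0; i < max_iters; ++i) {
        int a = (int) (i & 1);
        int b = (int) ((i >> 1) & 1);
        int t = gate(a, b);
        int got = gate(t, t);
        int want = a & b;
        if (got != want) {
            fprintf(stderr, "iteration %ld: AND(%d,%d) = %d, expected %d\n",
                    i, a, b, got, want);
            return i;
        }
    }
    return -1;
}
```

Calling `stress_and(reference_nand, 1000)` finds no failure, while `stress_and(broken_nand, 10)` stops at iteration 0 because AND(0,0) comes out as 1, the same symptom I saw in the log.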

Yeah, I was very lucky! After one hour, the error occurred. The culprit was found, so the next step was to work out a solution with the author.

Based on this experience, I think analyzing logs and simplifying the test model are very important techniques for resolving bugs in parallel programs. I hope everyone can improve their debugging ability. Happy debugging!