Let’s look at a simple Go HTTP client program:

```go
package main

import (
	"fmt"
	"io"
	"net/http"
	"os"
	"time"
)

func main() {
	for {
		resp, err := http.Get("https://www.google.com/")
		if err != nil {
			fmt.Fprintf(os.Stderr, "fetch: %v\n", err)
			os.Exit(1)
		}
		// Read the body to EOF and close it before the next request.
		_, err = io.Copy(io.Discard, resp.Body)
		resp.Body.Close()
		if err != nil {
			fmt.Fprintf(os.Stderr, "fetch: reading: %v\n", err)
			os.Exit(1)
		}
		time.Sleep(45 * time.Second)
	}
}
```

The logic of the above program is simple: every 45 seconds it fetches the page at the specified URL. I am a layman in web development, and I assumed HTTP communication was “short-lived”: when the HTTP client issues a request, it opens a TCP connection to the HTTP server, and once the client receives the response, the session ends and the TCP connection is torn down. But is that really what happens? I used the lsof command to check my guess:

```
# lsof -P -n -p 907
......
fetch 907 root 3u IPv4 0xfffff80013677810 0t0 TCP 192.168.80.129:32618->xxx.xxx.xxx.xxx:8080 (ESTABLISHED)
......
```

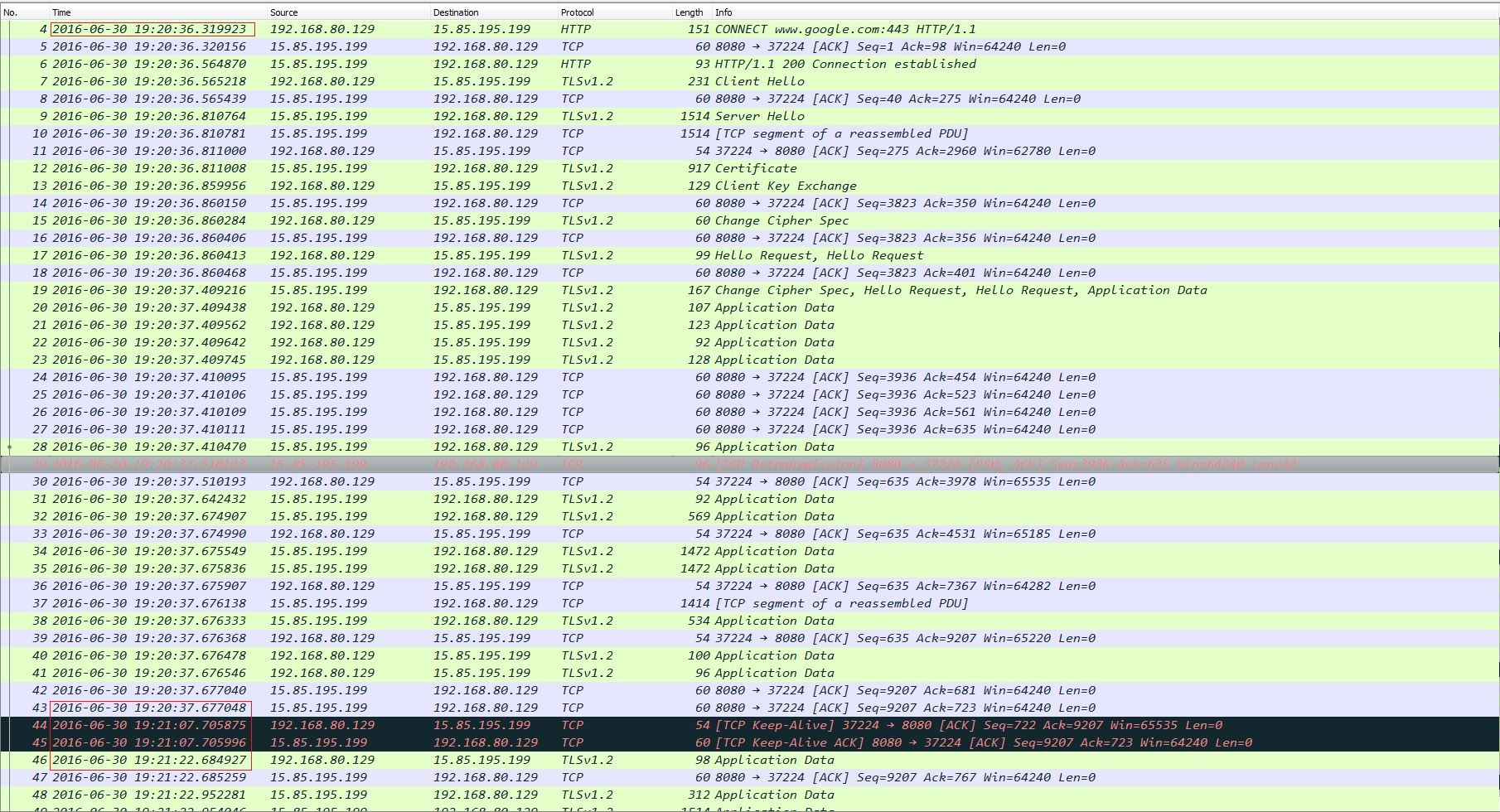

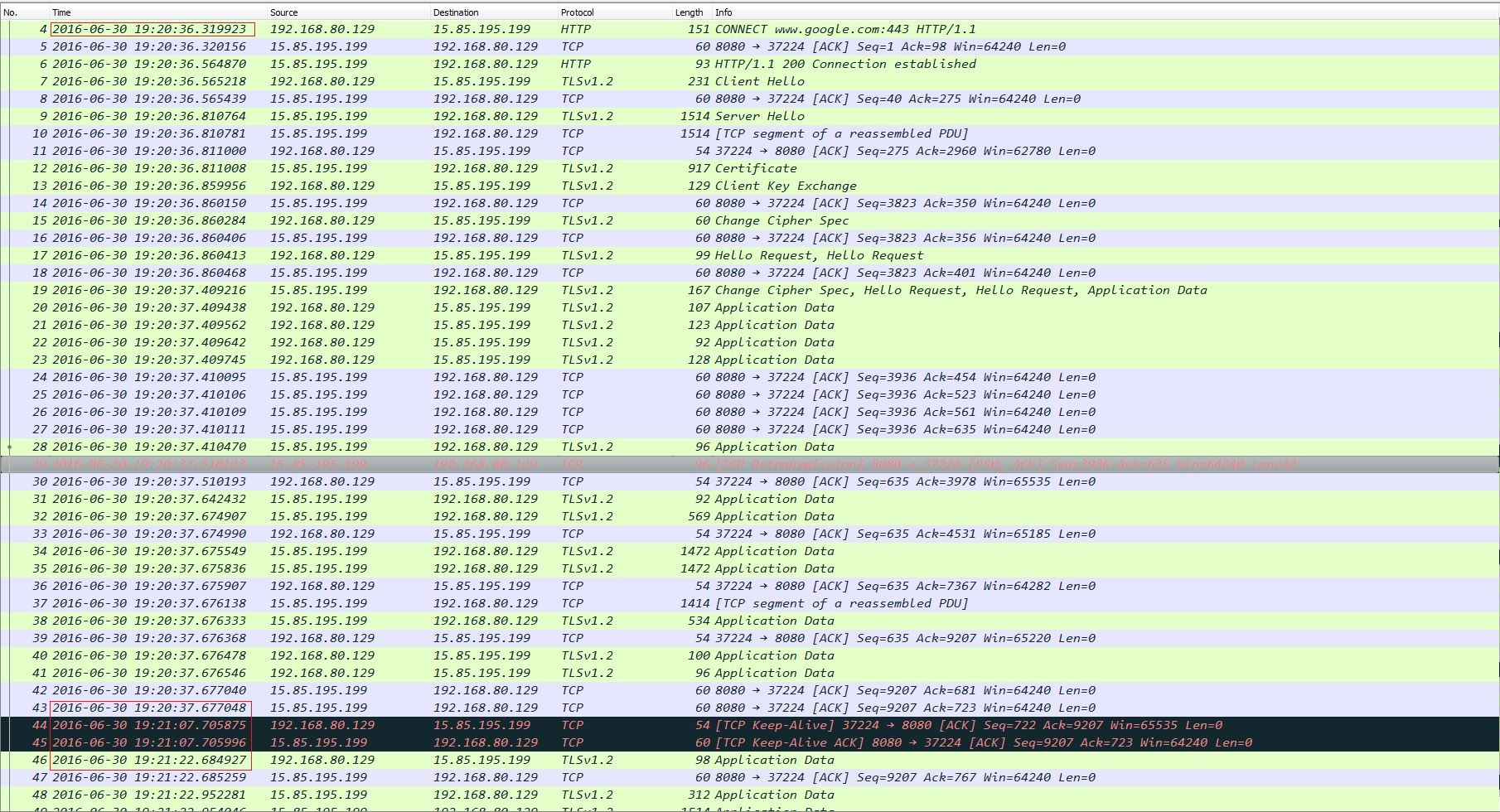

Oh! My assumption was wrong: there is a “long-lived” TCP connection. Why does this happen? Whenever I run into network-related problems, I turn to tcpdump and Wireshark and capture packets for analysis. This time was no exception, and the communication flow is shown in the following picture:

(1) The first packet shown is number 4, because packets 1 to 3 are the TCP three-way handshake and can safely be ignored;

(2) Packets 4 to 43 are the first HTTP GET exchange, which lasts about 2 seconds and ends at 19:20:37;

(3) Half a minute later, at 19:21:07, a TCP keep-alive packet appears on the wire. Oh dear! The root cause has been found! Although the HTTP exchange is over, the TCP connection still exists, and the keep-alive mechanism keeps it alive so that later HTTP requests can reuse it;

(4) As expected, 15 seconds later a new HTTP exchange begins at packet 46, exactly 45 seconds after the first HTTP conversation.

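What the capture shows is the effect of keep-alive: the TCP connection outlives the HTTP exchange and is reused by later HTTP requests. More precisely, Go's http.Transport keeps the finished connection in its pool of idle connections, and the TCP keep-alive probes only check that the idle connection is still alive. Another thing to pay attention to when reusing connections is that Response.Body must be read to completion and closed, as the net/http documentation explains: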

```go
type Response struct {
	// ...

	// Body represents the response body.
	//
	// The http Client and Transport guarantee that Body is always
	// non-nil, even on responses without a body or responses with
	// a zero-length body. It is the caller's responsibility to
	// close Body. The default HTTP client's Transport does not
	// attempt to reuse HTTP/1.0 or HTTP/1.1 TCP connections
	// ("keep-alive") unless the Body is read to completion and is
	// closed.
	//
	// The Body is automatically dechunked if the server replied
	// with a "chunked" Transfer-Encoding.
	Body io.ReadCloser

	// ...
}
```

As an example, modify the first program so that it issues two requests and closes both responses only after sleeping:

```go
package main

import (
	"fmt"
	"io"
	"net/http"
	"os"
	"time"
)

// closeResp reads the body to EOF and closes it; only then can the
// Transport put the connection back into its idle pool.
func closeResp(resp *http.Response) {
	_, err := io.Copy(io.Discard, resp.Body)
	resp.Body.Close()
	if err != nil {
		fmt.Fprintf(os.Stderr, "fetch: reading: %v\n", err)
		os.Exit(1)
	}
}

func main() {
	for {
		resp1, err := http.Get("https://www.google.com/")
		if err != nil {
			fmt.Fprintf(os.Stderr, "fetch: %v\n", err)
			os.Exit(1)
		}
		resp2, err := http.Get("https://www.facebook.com/")
		if err != nil {
			fmt.Fprintf(os.Stderr, "fetch: %v\n", err)
			os.Exit(1)
		}
		// Both bodies stay open during the sleep, so each response
		// keeps its own connection busy.
		time.Sleep(45 * time.Second)
		for _, v := range []*http.Response{resp1, resp2} {
			closeResp(v)
		}
	}
}
```

While it runs, you will see two TCP connections rather than one:

```
# lsof -P -n -p 1982
......
fetch 1982 root 3u IPv4 0xfffff80013677810 0t0 TCP 192.168.80.129:43793->xxx.xxx.xxx.xxx:8080 (ESTABLISHED)
......
fetch 1982 root 6u IPv4 0xfffff80013677000 0t0 TCP 192.168.80.129:12105->xxx.xxx.xxx.xxx:8080 (ESTABLISHED)
```

If you call closeResp before issuing the next HTTP request, the finished connection goes back to the idle pool and can be reused by a later request to the same host:

```go
package main

import (
	"fmt"
	"io"
	"net/http"
	"os"
	"time"
)

// closeResp reads the body to EOF and closes it; only then can the
// Transport put the connection back into its idle pool.
func closeResp(resp *http.Response) {
	_, err := io.Copy(io.Discard, resp.Body)
	resp.Body.Close()
	if err != nil {
		fmt.Fprintf(os.Stderr, "fetch: reading: %v\n", err)
		os.Exit(1)
	}
}

func main() {
	for {
		resp1, err := http.Get("https://www.google.com/")
		if err != nil {
			fmt.Fprintf(os.Stderr, "fetch: %v\n", err)
			os.Exit(1)
		}
		// Release the connection before sleeping and sending the next request.
		closeResp(resp1)

		time.Sleep(45 * time.Second)

		resp2, err := http.Get("https://www.facebook.com/")
		if err != nil {
			fmt.Fprintf(os.Stderr, "fetch: %v\n", err)
			os.Exit(1)
		}
		closeResp(resp2)
	}
}
```

P.S., the full code is here.

References:

Package http;

Reusing http connections in Golang;

Is HTTP Shortlived?.